Sample Classification Predictions: Difference between revisions

(→PLSDA) |

|||

| Line 56: | Line 56: | ||

===Relation to model.classification=== | ===Relation to model.classification=== | ||

These classification prediction fields are described in the [[Standard Model Structure]] page, which identifies | These classification prediction fields are described in the [[Standard Model Structure]] page, which identifies | ||

:Class Pred Strict = model.classification.inclass | :Class Pred Strict = model.classification.inclass | ||

:Class Pred Most Probable = model.classification.mostprobable | :Class Pred Most Probable = model.classification.mostprobable | ||

:Class Pred Probability <ClassID> = model.classification.probability, column <ClassID> | :Class Pred Probability <ClassID> = model.classification.probability, column <ClassID> | ||

:Class Pred Member <ClassID> = model.classification. | :Class Pred Member <ClassID> = model.classification.inclasses, column <ClassID> based on the strict threshold value used when the model was generated | ||

===Example of Classification Predictions=== | ===Example of Classification Predictions=== | ||

Latest revision as of 09:30, 23 April 2024

Overview

This page describes viewing sample class predictions using the Scores Plot. In addition, an overall summary of the classification results can be seen from viewing the Confusion Matrix and Table available from the toolbar icon. These class predictions are based on predicted class probabilities for samples.

Viewing classification results for samples can be done through the Scores Plot for a PLSDA, SVMDA, KNN or SIMCA model. If the model has been applied to test data, predictions will also be available for those samples. The predictions for the calibration data are "self-predictions" (predictions for the model on the calibration data itself.)

Results can be viewed as a plot using the Plot Scores toolbar button in Analysis (or the plotscores command at the command line) and can be viewed as a table by selecting File > Edit Data from the Plot Controls window while viewing a scores plot, or by using the Edit Data toolbar button on the scores plot itself.

The predictions available are based on various classification rules, including the following (all rules are described in detail after the list) :

- Class Pred Strict - Numerical class assignment based on strict assignment rules.

- Class Pred Most Probable - Numerical class assignment based on most probable class rules.

- Class Pred Probability <ClassID> - Probability that the sample belongs to a specific class <ClassID>.

- Class Pred Member <ClassID> - Logical (true/false) class assignment to a specific class <ClassID> based on strict multiple-class assignment rules.

- Class Pred Member - unassigned - Logical (true/false) class assignment indicating when no class could be assigned to a sample.

- Class Pred Member - multiple - Logical (true/false) class assignment indicating when sample belongs to more than one class based on strict multiple-class assignment rules.

- Misclassified - Logical (true/false) indicating when the strict classification does not match the known "measured" class assignment.

While viewing a plot, the Plot Controls window allows selection and viewing of the different rule predictions. For example, setting the Plot Controls X selection to "Sample Number" and the Y selection to "Class Pred Most Probable" will show the most probable class for each sample in the Scores Plot. This is displayed as the numerical class number (for reference, this is the same number viewable in the class lookup table, if the model was built from a DataSet with classes). When selected, the Y axis ranges over all possible class numbers and a sample determined to belong to class = 2 would be shown at (x,y) = (sample number, 2).

If viewing the table of results, the columns of the table will be the different classification results and the rows the different samples. Note that this information is also available in the model or prediction structure itself in the field "classifications", as described in the Standard Model Structure page.

Class Pred Strict

Strict class predictions are based on the rule that each sample belongs to a class if the probability is greater than a specified threshold probability value for one and only one class. If no class has a probability greater than the threshold, or if more than one class has a probability exceeding it, then the sample is assigned to class zero (0) indicating no class could be assigned. These predictions provide the most safety in class assignment. If there is too large an uncertainty of a sample being a member of a class, or if the sample appears to be in more than one class, these predictions will indicate that. If samples are expected to belong to more than one class, use the Class Pred Member predictions (described below). The threshold value is specified for classification methods by the option strictthreshold, with a default value of 0.5.

Use strict class predictions if you need to see a class assignment for each sample where the model is confident the sample belongs to this class and to this class only.

Class Pred Most Probable

Most probable predictions are based on choosing the class that has the highest probability regardless of the magnitude of that probability. Note this differs from Strict class predictions because if more than one class has > 0.50 probability, the highest probability will "win" the sample. Likewise, if all probabilities are below 0.50, the largest probability still "wins".

Use these predictions if you need to see a single class assignment for each sample and are not concerned with the absolute probability of the classes. This might be the case when a model has been built on only a few example samples for each class, when samples have been pre-screened as being in one of the classes modeled, or when "no class" has no meaning.

There is always a most likely class for a sample to belong to but it is possible that the sample is not well modeled and has low probabilities for all classes. Or it is possible that two classes are similar and a sample belonging to one of them will also have a high predicted probability of belonging to the second class. In these situations it may be more useful to use the Strict class predictions.

Class Pred Probability <ClassID>

The predicted probability that a sample belongs to a particular class is a method-dependent calculation as described in Class Probability Calculation, but in general is calculated such that a sample belonging to this class will have value closer to 1. Otherwise, it will be closer to 0. There will be a separate probability calculated for each class, and the class will be named in the description. For example the class named <ClassID>, is available under the label "Class Pred Probability <ClassID>".

These predictions are useful when you need to report a confidence of assignment or need to derive special rules for class assignment.

Class Pred Member <ClassID>

Class member predictions are reported as true/false for each class (<ClassID>) and are similar to the strict class predictions described earlier. A sample will be indicated as a member of a class if and only if the predicted probability for the given class is > 0.50. However, there is no restriction that a sample be assigned to one and only one class. As a result, a sample may be a member of more than one class if each class's probability is > 0.50.

These predictions should be used when an analysis permits a sample to belong to more than one class, or to no classes. That is, when the classes being predicted are not exclusionary for each other. For example, a model that reports both the water solubility of a compound (is or is not water soluble), and whether or not that compound is organic (organic vs. inorganic) should allow all combinations of both organic/inorganic and soluble/insoluble without exclusion.

Class Pred Member - unassigned

The predictions for "Class Pred Member - unassigned" identify samples which were not assigned to any class because no predicted probability was greater than 0.5.

Class Pred Member - multiple

The predictions for "Class Pred Member - multiple" identify samples which were assigned to more than one class with predicted probability was greater than 0.5.

Misclassified

Misclassified predictions identify samples where the predicted "Class Pred Strict" does not agree with the sample's actual class. For SIMCA and PLSDA the actual class could include more than one class and the sample is misclassified if its "Class Pred Member <ClassID>" do not correctly predict the actual class(es). If the sample's actual class is unknown then the sample will not be identified as as misclassified.

Relation to model.classification

These classification prediction fields are described in the Standard Model Structure page, which identifies

- Class Pred Strict = model.classification.inclass

- Class Pred Most Probable = model.classification.mostprobable

- Class Pred Probability <ClassID> = model.classification.probability, column <ClassID>

- Class Pred Member <ClassID> = model.classification.inclasses, column <ClassID> based on the strict threshold value used when the model was generated

Example of Classification Predictions

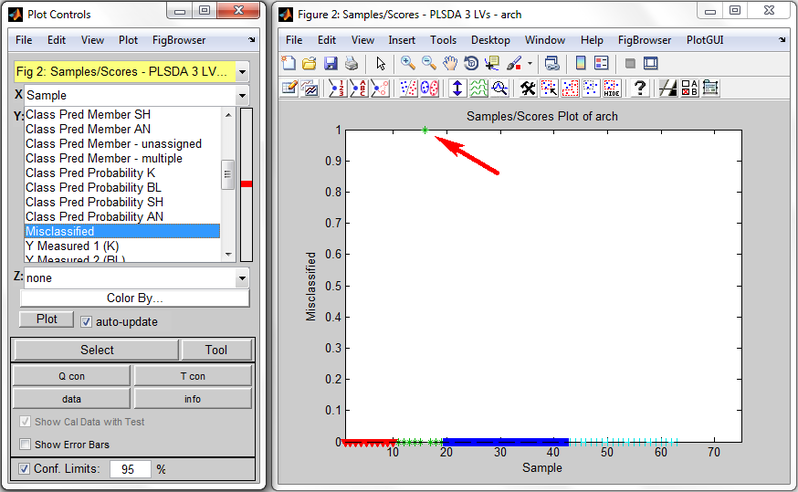

Shown below is an example Scores Plot from PLSDA run on the arch dataset. In the Plot Controls window (on left) are shown some of the classification predictions which may be plotted. The X menu is set to "Sample" and the Y menu is set to "Misclassified". The Scores Plot shows that all X samples have value 0 (NOT misclassified) except for one sample, the 16th, which has value 1, indicating it is misclassified. Looking at the "Class Pred Most Probable" predictions shows this sample is correctly predicted as belonging to class 2 ("BL"). Looking at "Class Member Pred K" and "Class Member Pred BL" both show sample 16 belonging, meaning that sample 16 belongs to each of these classes with probability > 0.5. Sample 16 actually only belongs to class "BL", however, as shown by Y="Class Measured 2 (BL)", and therefore it is considered to be misclassified. Note that none of the unknown class samples (samples 64-75) are marked as misclassified.

Class Probability Calculation

Calculating the probability that a sample belongs to each possible class is done differently for each of the classifier methods, PLSDA, SVMDA, KNN, and SIMCA. These methods are described here. In all cases the class probabilities are saved as model.classification.probability, an nsamples x nclasses array. The columns are the classes, in order as given in model.classification.classnums (also see model.classification.classids).

PLSDA (and LREGDA, ANNDA, ANNDLDA)

PLSDA calculates the probability of each sample belonging to each possible class. The calculation of probabilities for a particular class ‘A’ involves examining the predicted y values from the PLSDA model for all samples, then fitting a Gaussian distribution to the predictions for class ‘A’ samples and another Gaussian distribution to all samples which are not class ‘A’. The class ‘A’ probability distribution function then gives the probability of any sample belonging to class ‘A’ from its predicted y value. This method is described in more detail at : | How is the prediction probability and threshold calculated for PLSDA?.

This is done for each possible class in turn and the results are saved in PLSDA models as model.detail.predprobability.

Side Note: The predicted y values used in these binary classification problems for "class A" versus "not class A" can be viewed in the Scores Plot where they are listed in the Plot Controls as "Y Predicted 1 (class 1 name)", "Y Predicted 2 (class 2 name)", etc. It is useful to view these plotted against "Sample" for example. The discriminant threshold value value is also plotted in these plots (see the link above for more details). The threshold values for these class binary classification problems are stored in model.detail.threshold, one value per class.

SVMDA

The LIBSVM library calculates the probabilities of each sample belonging to each possible class if the "Probability Estimates" option is enabled (default setting) in the SVMDA analysis window (or if the probabilityestimates option is set equal to 1 (default value) in command line usage). The method is explained in [1], section 8, "Probability Estimates". The method first calculates one-against-one pairwise class probabilities as a function of decision value, using the training data. The class probabilites are then found as an optimization problem using these k*(k-1)/2 pairwise probabilities (assuming there are k classes).

KNN

KNN models include the probabilities of each sample belonging to each possible class in the model.classification.probability field. The probability that a sample belongs to class ‘A’ is calculated as the the fraction of nearest-neighbors which have that particular class.

SIMCA

SIMCA calculates the probability of each sample belonging to each possible submodel class or class group. The calculation of a sample's probability of belonging to a particular submodel class, say class 'A', involves examining the Q and/or T^2 statistics for the sample in that sub-model. This is done for each possible class and the results are saved in the SIMCA model as model.classification.probability.

The calculation of class probability for a sample is done based on the "rule" option in the SIMCA model, the Q and/or T^2 for the sample, and an estimation of the T^2 and Q distributions from the calibration data.

First, the confidence level corresponding to the sample's observed Q and/or T^2 is estimated from the distributions in the calibration data. Next, one of two methods are used to calculate probability:

- For decision rules "Q", "T2", or "Both": the confidence limits estimated for the Q and T^2 statistics are both converted into probabilities using:

class_probability = (1-C)/(1-D)*0.50.

- where C is the confidence level determined from either of the statistics and D is the decision limit threshold defined in the SIMCA model's options (options.rule.limit.t2 or options.rule.limit.q). Thus, a sample which has a confidence level determined to be at the decision limit will be assigned a probability of 50%. If the "Q" or "T2" rules are use, the corresponding probability is reported. If the "Both" rule is used, the lower probability calculated from the Q and T^2 statistics is reported.

- For decision rule "combined": the combined statistic, defined as: sqrt( (Q)^2 + (T^2)^2 ), is used along with the empirically-determined distribution of confidence levels from Q and T^2 to interpolate a probability. In this case, a confidence level of 2D-1 (e.g. which would be =0.9 when D=0.95) is assigned 100% probability and a confidence level of 0.999 (or (D+1)/2, whichever is larger) is assigned as 0% probability.

- For example, with decision limit threshold at 0.95 (= 50% probability), a confidence level of 0.98 corresponds to an in-class probability of 20% whereas a confidence level of 0.92 corresponds to a 70% in-class probability.