SVM Function Settings

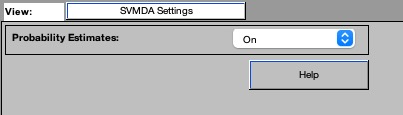

Latest revision as of 09:03, 5 October 2023

Support Vector Machines

SVMs are models that can be used for regression or classification problems; with a non-linear kernel such as RBF they can fit non-linear relationships. The following settings may be accessed from the Options GUI. For SVMDA, the probability estimates can be adjusted from the Function Settings panel.

SVM Type

Classification (SVMDA)

- Nu-SVM optimizes a model with an adjustable parameter Nu (0 -> 1], which sets an upper bound on the fraction of calibration samples that may be misclassified.

- C-SVC optimizes a model with an adjustable cost parameter C (0 -> inf), which controls how strongly misclassifications are penalized.
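To make the role of C concrete, the sketch below evaluates the textbook soft-margin (C-SVC) primal objective for a linear decision function: a regularization term plus C times the hinge losses of the samples that fall inside the margin or on the wrong side. It illustrates the standard formulation only and is not the software's solver; the function name and the -1/+1 label encoding are assumptions.

```python
def c_svc_objective(w, b, X, y, C):
    """Textbook C-SVC primal objective for a linear model (illustrative sketch).

    0.5 * ||w||^2 + C * sum_i max(0, 1 - y_i * (w . x_i + b)),
    with class labels y_i assumed to be encoded as -1 or +1.
    """
    if C <= 0:
        raise ValueError("C must be positive")
    regularization = 0.5 * sum(wj * wj for wj in w)
    hinge_total = 0.0
    for xi, yi in zip(X, y):
        margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
        # Correct, well-separated samples (margin >= 1) cost nothing.
        hinge_total += max(0.0, 1.0 - margin)
    return regularization + C * hinge_total
```

With the same weights and data, raising C from 1 to 10 multiplies the misclassification term tenfold, which is why large C values push the optimizer toward fewer training errors at the cost of a narrower margin.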

Regression (SVM)

(shown above)

- Epsilon-SVR optimizes a model using the adjustable parameters epsilon (the tolerance within which prediction errors are not penalized) and C (the cost of prediction errors larger than epsilon).

- Nu-SVR optimizes a model using the adjustable parameter Nu (0 -> 1], which sets a lower bound on the fraction of calibration samples used as support vectors.
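The two regression parameters can be illustrated with a short sketch: the epsilon-insensitive loss ignores errors inside the epsilon tube and charges C per unit of error beyond it, while Nu fixes a minimum count of support vectors. This follows the standard epsilon-SVR and nu-SVR definitions; the function names are hypothetical and the regularization term of the full objective is omitted.

```python
import math


def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Per-sample loss: 0 if |error| <= epsilon, otherwise |error| - epsilon."""
    if epsilon < 0:
        raise ValueError("epsilon must be non-negative")
    return [max(0.0, abs(t - p) - epsilon) for t, p in zip(y_true, y_pred)]


def epsilon_svr_penalty(y_true, y_pred, epsilon, C):
    """Data term C * sum(loss) of the epsilon-SVR objective (regularizer omitted)."""
    return C * sum(epsilon_insensitive_loss(y_true, y_pred, epsilon))


def nu_min_support_vectors(nu, n_samples):
    """Nu is a lower bound on the support-vector fraction, so at least ceil(nu * n) are needed."""
    if not 0 < nu <= 1:
        raise ValueError("nu must be in (0, 1]")
    return math.ceil(nu * n_samples)
```

For example, with epsilon = 0.25 a prediction that is off by 0.05 costs nothing, while one that is off by 1.0 costs 0.75 before being scaled by C.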

Kernel Type

Two kernel types are supported: Linear and Gaussian Radial Basis Function (RBF). The linear kernel has no parameters, but the RBF kernel has a ‘gamma’ parameter (> 0), which is available through the Options GUI.
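A minimal sketch of the two kernels follows, assuming the common LIBSVM-style parameterization of the Gaussian RBF kernel, K(x, z) = exp(-gamma * ||x - z||^2). The function names are illustrative and not part of the software.

```python
import math


def linear_kernel(x, z):
    """Linear kernel: the plain dot product, with no tunable parameters."""
    return sum(a * b for a, b in zip(x, z))


def rbf_kernel(x, z, gamma):
    """Gaussian RBF kernel exp(-gamma * ||x - z||^2); gamma must be > 0."""
    if gamma <= 0:
        raise ValueError("gamma must be > 0")
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)
```

The RBF value is 1 for identical samples and decays toward 0 as samples move apart; a larger gamma makes it decay faster, so each support vector influences a smaller neighborhood and the decision boundary becomes more flexible.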

Compression

X-block data may be compressed by applying PCA or PLS to reduce the number of X-block variables. SVM is then applied to the resulting scores. The number of latent variables (principal components) to use in the compression model must be supplied. The compression model is stored as part of the SVM model and is applied automatically to new data.
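The sketch below shows the PCA variant of this idea with NumPy: the compression model (column means and the first k loadings) is fitted once on calibration data and stored, and new data are projected with those stored values rather than refitted. The class name and the mean-centering step are assumptions for illustration; PLS compression and the software's own preprocessing are not shown.

```python
import numpy as np


class PCACompression:
    """Hypothetical PCA compression step: fit on calibration X, reuse on new data."""

    def __init__(self, n_components):
        self.n_components = n_components

    def fit(self, X):
        X = np.asarray(X, dtype=float)
        self.mean_ = X.mean(axis=0)  # assumed centering, stored with the model
        # Rows of Vt are the principal-component loadings, ordered by explained variance.
        _, _, Vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.loadings_ = Vt[: self.n_components].T  # shape (n_vars, n_components)
        return self

    def transform(self, X):
        # New samples reuse the calibration mean and loadings, so the SVM sees
        # scores in the same coordinate system it was trained on.
        X = np.asarray(X, dtype=float)
        return (X - self.mean_) @ self.loadings_
```

In use, an SVM would be trained on `PCACompression(k).fit(X_cal).transform(X_cal)`, and the same fitted object would transform each new X-block before prediction.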

Probability Estimates

When enabled, this option estimates, for each sample, the probability that the sample belongs to each class. Results can be viewed in the Scores Plot. This feature is only available for classification (SVMDA) models.
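How the probabilities are computed is not stated here. LIBSVM, a widely used SVM library, maps a two-class decision value f to a probability with Platt's sigmoid, P(+1 | f) = 1 / (1 + exp(A*f + B)), and combines pairwise estimates for more than two classes. The sketch below shows only the two-class sigmoid, assuming this approach applies; A and B are normally fitted on held-out decision values, and here they are treated as given inputs.

```python
import math


def platt_probability(decision_value, A, B):
    """Platt's sigmoid P(class +1 | f) = 1 / (1 + exp(A * f + B))."""
    z = A * decision_value + B
    # Two algebraically equal forms, chosen to avoid overflow for large |z|.
    if z >= 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def binary_class_probabilities(decision_value, A, B):
    """Return (P(+1), P(-1)) for one sample; the two values sum to 1."""
    p_pos = platt_probability(decision_value, A, B)
    return p_pos, 1.0 - p_pos
```

With a fitted A below zero, larger decision values give higher probabilities for the positive class, and a sample on the decision boundary (f = 0, B = 0) gets 0.5.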

Cross-validation

Performs n-fold cross-validation. The data are randomly divided into n groups. Each group is excluded in turn, an SVM is trained on the remaining groups (which are preprocessed separately), and the model is validated against the excluded group. Cross-validation results from this algorithm can differ notably from those of other model types, so take care when comparing them. A separate validation set is strongly recommended when comparing SVM models with other model types.
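The procedure can be sketched as follows. The key detail is that preprocessing (mean-centering is used here as a stand-in) is computed from the training groups only and then applied to the excluded group, so no information from the excluded samples leaks into the model. The `fit` and `predict` callables are hypothetical placeholders for SVM training and prediction, and the round-robin fold assignment after shuffling is an assumption.

```python
import random


def make_folds(n_samples, n_folds, seed=0):
    """Randomly split sample indices into n_folds groups of near-equal size."""
    if not 2 <= n_folds <= n_samples:
        raise ValueError("need 2 <= n_folds <= n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[k::n_folds] for k in range(n_folds)]


def cross_validate(X, y, n_folds, fit, predict, seed=0):
    """Exclude each fold in turn, train on the rest, and predict the excluded fold.

    fit(X_train, y_train) -> model and predict(model, row) -> value are
    hypothetical callables standing in for SVM training and prediction.
    """
    n, n_vars = len(X), len(X[0])
    predictions = [None] * n
    for test_idx in make_folds(n, n_folds, seed):
        excluded = set(test_idx)
        train_idx = [i for i in range(n) if i not in excluded]
        # Preprocessing statistics come from the training folds only ...
        means = [sum(X[i][j] for i in train_idx) / len(train_idx) for j in range(n_vars)]

        def center(row):
            return [v - m for v, m in zip(row, means)]

        model = fit([center(X[i]) for i in train_idx], [y[i] for i in train_idx])
        # ... and are applied unchanged to the excluded fold before prediction.
        for i in test_idx:
            predictions[i] = predict(model, center(X[i]))
    return predictions
```

Because every sample is predicted by a model that never saw it, the returned predictions can be compared directly with y to compute a cross-validation error.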